VGGNet

1) Overview

- Investigates the effect of convolutional network depth on accuracy in large-scale image recognition.

- Took 2nd place in the ILSVRC 2014 classification task (1st: GoogLeNet). However, VGGNet is used far more widely than GoogLeNet because its structure is simple and its learned features generalize well to other datasets.

- Uses a training procedure similar to AlexNet's.

2) Depth (small filters, deeper networks)

- Networks before ILSVRC 2014 were relatively shallow (8 layers or fewer, e.g. AlexNet).

- VGGNet uses very small 3x3 conv filters throughout, the smallest size that captures the notion of left/right, up/down, and center (configuration C additionally uses 1x1 convs).

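- A stack of two 3x3 conv layers (stride 1) has the same effective receptive field as one 5x5 layer, and a stack of three has the same as one 7x7 layer, but with fewer parameters: with C channels, three 3x3 layers have 3(3²C²) = 27C² weights versus 7²C² = 49C² for a single 7x7 layer.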

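- Each extra conv layer adds another ReLU, so a stack of three 3x3 layers applies three non-linearities instead of one. This makes the decision function more discriminative and helps extract useful features.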
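The receptive-field and parameter arithmetic above can be checked with a few lines of Python. This is a minimal sketch that assumes stride-1, padded conv layers with C input and C output channels and ignores biases; the helper names are hypothetical.

```python
def stacked_receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of a stack of stride-1 k x k conv layers."""
    field = 1
    for _ in range(num_layers):
        field += kernel - 1  # each layer widens the field by (k - 1) pixels
    return field


def conv_weights(kernel: int, channels: int) -> int:
    """Weights in one k x k conv layer with C input and C output channels (no biases)."""
    return kernel * kernel * channels * channels


C = 512
print(stacked_receptive_field(2))  # 5 -> same as one 5x5 layer
print(stacked_receptive_field(3))  # 7 -> same as one 7x7 layer
print(3 * conv_weights(3, C))      # 27 * C^2 = 7077888
print(conv_weights(7, C))          # 49 * C^2 = 12845056
```

The three-layer stack needs about 55% (27/49) of the weights of the single 7x7 layer while inserting two extra ReLUs.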

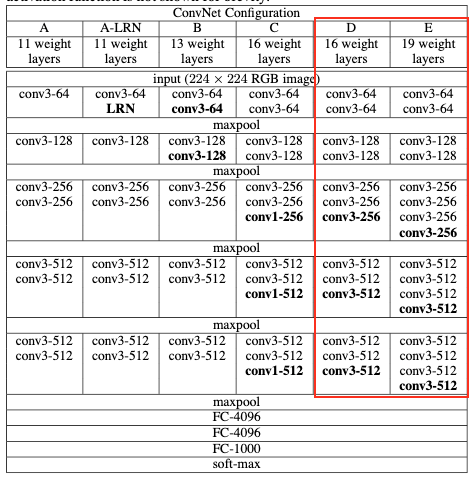

Architecture

1) Hyper-parameters

- The only preprocessing is subtracting the mean RGB value, computed on the training set, from each pixel.

- 3x3 conv filters with stride 1 and padding 1 (spatial resolution is preserved); 2x2 max-pooling with stride 2 (spatial resolution is halved).

- ReLU as the activation function.

- No LRN (Local Response Normalisation): it does not improve performance but increases memory consumption and computation time.

- Mini-batch gradient descent (batch size 256) with momentum 0.9.

- Weight decay (L2 penalty multiplier) 5*10^-4; learning rate initially 0.01, divided by 10 whenever validation-set accuracy stopped improving.
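The optimiser settings above can be sketched in plain Python. The update uses the common "velocity" formulation of momentum with the weight-decay term added to the gradient, and the scheduler divides the learning rate by 10 when validation accuracy stops improving. The function and class names and the `patience` parameter are hypothetical (the notes do not say how long to wait before decaying); a real training run would use a framework optimiser instead.

```python
import math


def sgd_momentum_step(weights, grads, velocity, lr, momentum=0.9, weight_decay=5e-4):
    """One mini-batch SGD update with momentum and L2 weight decay (lists of floats)."""
    new_weights, new_velocity = [], []
    for w, g, v in zip(weights, grads, velocity):
        g = g + weight_decay * w      # adds the gradient of the L2 penalty (weight_decay / 2) * w^2
        v = momentum * v - lr * g     # velocity keeps a decaying sum of past steps
        new_velocity.append(v)
        new_weights.append(w + v)
    return new_weights, new_velocity


class DivideOnPlateau:
    """Divide the learning rate by 10 when validation accuracy stops improving."""

    def __init__(self, lr=0.01, factor=0.1, patience=1):
        self.lr = lr
        self.factor = factor
        self.patience = patience  # hypothetical: epochs without improvement before decaying
        self.best = -math.inf
        self.stalled = 0

    def step(self, val_accuracy):
        if val_accuracy > self.best:
            self.best = val_accuracy
            self.stalled = 0
        else:
            self.stalled += 1
            if self.stalled >= self.patience:
                self.lr *= self.factor
                self.stalled = 0
        return self.lr


scheduler = DivideOnPlateau()
for acc in [0.50, 0.60, 0.60, 0.65]:
    lr = scheduler.step(acc)
print(lr)  # about 0.001 (0.01 divided by 10 once)
```

Folding weight decay into the gradient before the momentum update means the L2 penalty is also smoothed by the velocity term.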

2) Weight initialization

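- Bad initialisation can stall learning because gradients are unstable in deep nets. To avoid this, VGG-A (11 layers), which is shallow enough to train from random initialisation, is trained first, and its weights are reused to initialise the deeper nets (this also shortens training).

- The first 4 conv layers and the last 3 fully-connected layers are initialised with net A's weights; the intermediate layers are initialised randomly (normal distribution with zero mean and variance 10^-2; biases set to zero).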

3) Training

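- Let S be the training scale: the smallest side of the isotropically rescaled training image, from which the 224x224 network input is cropped.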

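- Single-scale training (fixed S): two fixed scales, S = 256 and S = 384, are used. The network is first trained with S = 256; the S = 384 network is then initialised with the pre-trained S = 256 weights and trained with a smaller initial learning rate of 10^-3.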

- Multi-scale training (S_min = 256, S_max = 512): each training image is individually rescaled by randomly sampling S from [S_min, S_max].

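- Because objects in images can be of different sizes, this scale jittering acts as training-set augmentation: a single model learns to recognise objects over a wide range of scales.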
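The training-scale procedure above can be sketched as follows: draw S for each image, rescale isotropically so the smallest side equals S, then take a random 224x224 crop. This is a minimal sketch working on image sizes only (no pixels); the helper names are hypothetical, sampling S as a uniform integer is an assumption, and other augmentations (such as flips) are omitted.

```python
import random

CROP = 224  # fixed ConvNet input size


def rescale_size(width, height, s):
    """Isotropic rescale so that the smallest image side equals s."""
    if width <= height:
        return s, round(height * s / width)
    return round(width * s / height), s


def sample_training_crop(width, height, s_min=256, s_max=512, rng=random):
    """Scale jittering: draw S per image, rescale, then take a random 224x224 crop.

    Passing s_min == s_max gives single-scale (fixed S) training.
    """
    s = rng.randint(s_min, s_max)                  # assumption: S drawn uniformly from [s_min, s_max]
    new_w, new_h = rescale_size(width, height, s)
    x = rng.randint(0, new_w - CROP)               # crop stays inside the rescaled image
    y = rng.randint(0, new_h - CROP)
    return s, (new_w, new_h), (x, y, x + CROP, y + CROP)


rng = random.Random(0)
print(sample_training_crop(500, 375, rng=rng))   # jittered S in [256, 512]
print(sample_training_crop(500, 375, 256, 256))  # fixed S = 256
```

Because S is at least 256, the rescaled image is always larger than the crop, so the crop offsets are never negative.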

4) Testing

- For the best results, combine several models into an ensemble by averaging their soft-max class posteriors.

- The test image is isotropically rescaled to a pre-defined smallest image side Q (the test scale).

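- Q is not necessarily equal to the training scale S.

- Dense evaluation: the fully-connected layers are converted to conv layers (the first to a 7x7 conv, the last two to 1x1 convs), so the network is applied to the whole uncropped image and the resulting class score map is spatially averaged.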
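To see why a fully convolutional VGG network accepts any test scale Q, the sketch below tracks the spatial size through the network, assuming a square Q x Q input, five 2x2/stride-2 max-pools (the 3x3 convs with padding 1 keep the size), and the first FC layer turned into a 7x7 conv with no padding. It then averages a toy class score map into one score per class. The helper names and the toy scores are hypothetical.

```python
def feature_map_side(q, num_pools=5):
    """Side of the last conv feature map for a q x q input."""
    side = q
    for _ in range(num_pools):
        side //= 2  # 2x2 max-pool, stride 2; the 3x3 convs (pad 1) keep the size
    return side


def score_map_side(q):
    """fc6 becomes a 7x7 conv (no padding); fc7 and fc8 become 1x1 convs."""
    return feature_map_side(q) - 7 + 1


def spatial_average(score_map):
    """Average a class score map (rows x cols x classes) into one score per class."""
    cells = [scores for row in score_map for scores in row]
    return [sum(column) / len(cells) for column in zip(*cells)]


for q in (224, 256, 384, 512):
    print(q, score_map_side(q))  # 224 -> 1, 256 -> 2, 384 -> 6, 512 -> 10

toy_map = [[[1.0, 0.0], [3.0, 2.0]]]  # 1 x 2 positions, 2 classes (hypothetical)
print(spatial_average(toy_map))       # [2.0, 1.0]
```

At Q = 224 the score map is 1x1, which is exactly the original single-crop network; larger Q simply yields more spatial positions to average.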

Results

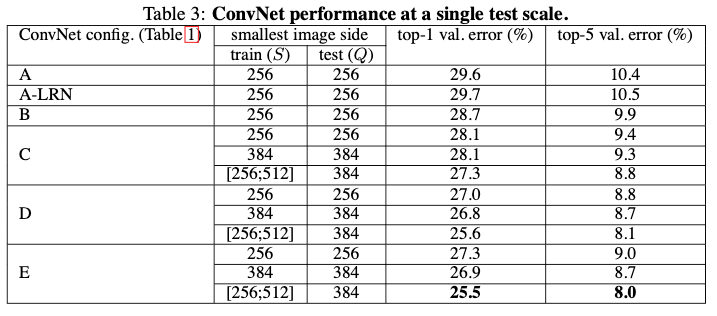

1) Single-scale evaluation (one test scale per model: Q = S for fixed S, Q = 0.5(S_min + S_max) for jittered S)

- Adding LRN (model A-LRN) does not improve on model A, which has no normalisation layers.

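- Classification error decreases as depth increases, from 11 layers (A) to 19 (E), and a deep net with small filters outperforms a shallower net with larger filters. Configuration C (with 1x1 convs) beats B, so additional non-linearity does help, but D (3x3 throughout) beats C, so capturing spatial context with non-trivial receptive fields also matters.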

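- Scale jittering at training time (S ∈ [256, 512]) gives significantly better results than training at a fixed S, even though a single scale is used at test time.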

2) Multi scale evaluation

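- Scale jittering at test time (running the model over several rescaled versions of a test image and averaging the class posteriors) leads to better performance.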

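- A large discrepancy between training and test scales leads to a drop in performance, so fixed-S models are evaluated at test scales close to S, Q = {S - 32, S, S + 32}, while jittered-S models are evaluated over a wider range, Q = {S_min, 0.5(S_min + S_max), S_max}.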

3) Multi crop evaluation

- Using multiple crops performs slightly better than dense evaluation.

- Dense evaluation and multiple crops are complementary: combining them (averaging their soft-max outputs) performs better than either alone.
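Combining evaluation methods, like combining models in an ensemble, amounts to averaging soft-max class posteriors. The sketch below shows that step with hypothetical raw scores standing in for the dense and multi-crop outputs; the function names are illustrative only.

```python
import math


def softmax(scores):
    """Turn raw class scores into soft-max posteriors (numerically stable)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def combine_posteriors(*posterior_lists):
    """Average soft-max posteriors from several evaluation methods or models."""
    return [sum(values) / len(posterior_lists) for values in zip(*posterior_lists)]


dense = softmax([2.0, 1.0, 0.1])        # hypothetical scores from dense evaluation
multi_crop = softmax([1.5, 1.8, 0.2])   # hypothetical scores from multi-crop evaluation
combined = combine_posteriors(dense, multi_crop)
print(max(range(len(combined)), key=combined.__getitem__))  # 0 (index of the predicted class)
```

Averaging probabilities rather than taking a majority vote lets a confident method outweigh an uncertain one.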

Ref.