AlexNet

1) Overview

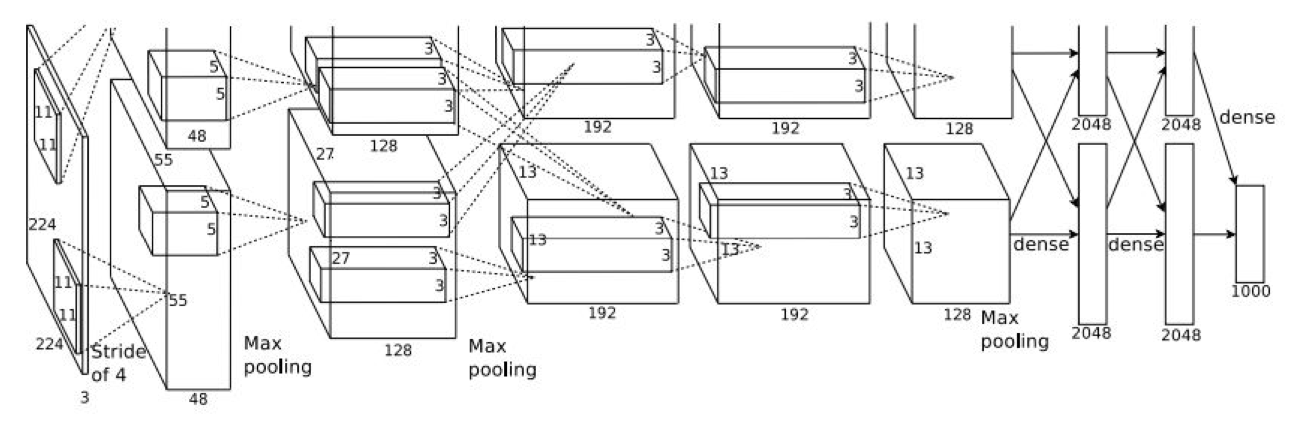

- 60 million parameters and 650,000 neurons

- Consists of 5 convolutional layers, some max-pooling layers, and 3 fully-connected layers.

- Uses a 1000-way softmax for classification.

- To make training faster, AlexNet used non-saturating neurons (ReLU) and an efficient GPU implementation of the convolution operation.

- Uses dropout to reduce overfitting in the fully-connected layers.
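The parameter count above can be checked with a little arithmetic. The sketch below is only an illustration: the layer shapes are taken from the AlexNet paper rather than from these notes, and the names `CONV_LAYERS`, `FC_LAYERS` and `count_parameters` are made up for the example.

```python
# Layer shapes follow the AlexNet paper (Krizhevsky et al., 2012); they are not
# listed in the notes above. CONV2, CONV4 and CONV5 only see the kernel maps on
# their own GPU, so their kernels cover half of the previous layer's channels.

# (name, kernel_height, kernel_width, input_channels_per_kernel, num_kernels)
CONV_LAYERS = [
    ("CONV1", 11, 11, 3, 96),
    ("CONV2", 5, 5, 48, 256),
    ("CONV3", 3, 3, 256, 384),
    ("CONV4", 3, 3, 192, 384),
    ("CONV5", 3, 3, 192, 256),
]

# (name, input_features, output_features)
FC_LAYERS = [
    ("FC6", 6 * 6 * 256, 4096),
    ("FC7", 4096, 4096),
    ("FC8", 4096, 1000),
]


def count_parameters():
    """Return a dict of per-layer parameter counts (weights plus biases)."""
    counts = {}
    for name, kh, kw, in_ch, n_kernels in CONV_LAYERS:
        counts[name] = kh * kw * in_ch * n_kernels + n_kernels
    for name, n_in, n_out in FC_LAYERS:
        counts[name] = n_in * n_out + n_out
    return counts


if __name__ == "__main__":
    counts = count_parameters()
    for name, n in counts.items():
        print(f"{name}: {n:,}")
    print(f"total: {sum(counts.values()):,}")
```

Under these assumptions the total comes to roughly 61 million, in line with the 60 million quoted above, and the three fully-connected layers hold the large majority of the weights.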

2) ReLU activation function

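- LeNet-5 used the tanh activation function.

- AlexNet used ReLU, a non-saturating nonlinearity, to speed up training. The paper reports that a four-layer CNN with ReLU reached 25% training error on CIFAR-10 six times faster than the same network with tanh.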
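To see why a non-saturating function helps, it may be useful to compare the gradients of the two activations. This is a small illustrative sketch (the function names are made up for the example): the tanh gradient shrinks toward zero for large inputs, while the ReLU gradient stays at 1 for any positive input.

```python
import math


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise.
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    # Derivative of tanh: 1 - tanh(x)^2, which shrinks toward 0 as |x| grows.
    return 1.0 - math.tanh(x) ** 2


if __name__ == "__main__":
    for x in (0.5, 2.0, 5.0):
        print(f"x={x}: tanh'={tanh_grad(x):.6f}, relu'={relu_grad(x):.1f}")
```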

3) Dropout

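- AlexNet applied dropout in the first two fully-connected layers: during training, the output of each hidden neuron is set to zero with probability 0.5.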
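A minimal sketch of dropout as the paper describes it, assuming a layer's activations are given as a plain list. `dropout_train` and `dropout_test` are hypothetical helper names, not part of any library.

```python
import random


def dropout_train(activations, p_drop=0.5, rng=random):
    """Training pass: zero each activation independently with probability p_drop."""
    return [0.0 if rng.random() < p_drop else a for a in activations]


def dropout_test(activations, p_drop=0.5):
    """Test pass: keep every neuron but scale outputs by the keep probability."""
    return [a * (1.0 - p_drop) for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    hidden = [0.8, 1.5, 0.0, 2.3, 0.4, 1.1]
    print("train:", dropout_train(hidden, rng=rng))
    print("test: ", dropout_test(hidden))
```

Scaling at test time follows the paper. Many current frameworks instead scale the surviving activations during training ("inverted dropout") and leave the test pass untouched.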

4) Overlapping pooling

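- AlexNet used max pooling with a 3 x 3 window and stride 2, so neighbouring windows overlap.

- Compared with non-overlapping pooling (2 x 2 window, stride 2), which produces output of the same dimensions, overlapping pooling lowered the top-1 and top-5 error rates by 0.4% and 0.3% and made the model slightly harder to overfit.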
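The two pooling schemes can be compared on a small example. This is a 1-D sketch with made-up helper names. The width of 55 used in the size check is an assumption (the usual figure for the CONV1 output), not something stated in these notes.

```python
def pool_output_size(n, window, stride):
    """Number of pooling windows that fit along one dimension (no padding)."""
    return (n - window) // stride + 1


def max_pool_1d(values, window, stride):
    """Max pooling over a 1-D list; windows overlap whenever stride < window."""
    return [
        max(values[start:start + window])
        for start in range(0, len(values) - window + 1, stride)
    ]


if __name__ == "__main__":
    row = [1, 3, 2, 5, 4, 6, 7]
    print(max_pool_1d(row, window=3, stride=2))  # overlapping
    print(max_pool_1d(row, window=2, stride=2))  # non-overlapping
    # Both schemes give the same output width for a 55-wide feature map.
    print(pool_output_size(55, 3, 2), pool_output_size(55, 2, 2))
```

With stride 2 in both cases, the output has the same size. The overlapping version simply lets each output look at one extra input on each step.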

5) Local Response Normalization

- This layer is useful when we are dealing with ReLU neurons, whose activations are unbounded.

- Each neuron's activation is normalized by the activity of its neighbouring neurons (adjacent kernel maps at the same spatial position). A strongly activated neuron then stands out relative to its neighbours, so we can detect high-frequency features with a large response.

- In contrast, if all neurons in the neighbourhood have large activations, normalization diminishes all of them.
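The behaviour described above can be sketched for a single spatial position. The constants k = 2, n = 5, alpha = 1e-4 and beta = 0.75 are the values reported in the paper. The function name and the reading of n/2 as integer division are assumptions made for this example.

```python
def local_response_norm(channels, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize activations across channels at one spatial position.

    channels[i] is the ReLU output of kernel i at a fixed (x, y).
    b_i = a_i / (k + alpha * sum_j a_j^2) ** beta, where j runs over the
    n channels centred on i (clipped at the first and last channel).
    """
    num = len(channels)
    half = n // 2  # assumption: n/2 in the paper is read as integer division
    out = []
    for i, a in enumerate(channels):
        lo = max(0, i - half)
        hi = min(num - 1, i + half)
        sq_sum = sum(channels[j] ** 2 for j in range(lo, hi + 1))
        out.append(a / (k + alpha * sq_sum) ** beta)
    return out


if __name__ == "__main__":
    lone = local_response_norm([0.0, 0.0, 50.0, 0.0, 0.0])
    crowded = local_response_norm([50.0] * 5)
    print("single strong neuron:", round(lone[2], 3))
    print("all neighbours strong:", round(crowded[2], 3))
```

The same input value of 50 comes out smaller when all of its neighbours are also large, which is the damping effect described in the last bullet.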

6) Data augmentation

- Artificially enlarge the dataset using label-preserving transformations.

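- To save computation, the transformed images were generated on the CPU while the GPU was training on the previous batch.

(1) Training: extract random 224 x 224 patches, and their horizontal reflections, from the 256 x 256 images. Testing: extract five 224 x 224 patches (the four corners and the center) plus their horizontal reflections, and average the softmax predictions over the ten patches.

(2) Alter the intensities of the RGB channels in training images, using PCA on the set of RGB pixel values.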
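A minimal sketch of the crop-and-reflect augmentation from the paper: random patches and reflections for training, and ten fixed patches at test time. It assumes a single-channel image stored as a list of rows. The helper names are hypothetical, and the PCA-based colour change in (2) is not covered.

```python
import random


def crop(image, top, left, size):
    """Cut a size x size patch whose top-left corner is at (top, left)."""
    return [row[left:left + size] for row in image[top:top + size]]


def random_crop(image, size, rng=random):
    """Training: cut a size x size patch at a random offset."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - size)
    left = rng.randint(0, width - size)
    return crop(image, top, left, size)


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def ten_crop(image, size):
    """Testing: four corner patches and the center patch, plus their reflections."""
    height, width = len(image), len(image[0])
    offsets = [
        (0, 0), (0, width - size),
        (height - size, 0), (height - size, width - size),
        ((height - size) // 2, (width - size) // 2),
    ]
    crops = [crop(image, top, left, size) for top, left in offsets]
    return crops + [horizontal_flip(c) for c in crops]


if __name__ == "__main__":
    # A tiny 6 x 6 stand-in for a 256 x 256 image, cropped to 4 x 4.
    image = [[r * 6 + c for c in range(6)] for r in range(6)]
    patch = random_crop(image, 4, rng=random.Random(0))
    print(patch)
    print(len(ten_crop(image, 4)), "test-time patches")
```

At test time the network would be run on each of the ten patches and the softmax outputs averaged.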

Architecture

1) GPU

- The GPU they used (GTX 580) has only 3 GB of memory, which limits the maximum size of the network that can be trained on it.

- They therefore spread the network across two GPUs, placing half of the kernels (or neurons) on each.

- The GPUs communicate only in certain layers (CONV3, FC6, FC7, FC8).

2) stride 4 for CONV1

- Because the input image is large, CONV1 uses a stride of 4. This shrinks the spatial size of its output and so reduces computation in CONV1 and in the layers that follow.
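The effect of the stride on the CONV1 output size can be worked out with the standard output-size formula. This sketch assumes an 11 x 11 kernel without padding and a 227 x 227 input. The paper states 224 x 224, but 227 is the size for which the arithmetic comes out even, and it is the figure the cs231n slides use. `conv_output_size` is a made-up helper name.

```python
def conv_output_size(input_size, kernel_size, stride, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


if __name__ == "__main__":
    # Assumption: 227 x 227 input, the size for which stride 4 divides evenly.
    for stride in (1, 2, 4):
        out = conv_output_size(227, 11, stride)
        print(f"stride {stride}: {out} x {out} = {out * out} positions per kernel")
```

Under these assumptions, stride 4 evaluates each kernel at about 15 times fewer positions than stride 1 would.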

Ref.

blog.naver.com/laonple/220667260878

papers.nips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

cs231n.stanford.edu/slides/2020/lecture_9.pdf

prateekvjoshi.com/2016/04/05/what-is-local-response-normalization-in-convolutional-neural-networks/